rlberry.manager.evaluate_agents¶

- rlberry.manager.evaluate_agents(experiment_manager_list, n_simulations=5, choose_random_agents=True, fignum=None, show=True, plot=True)[source]¶

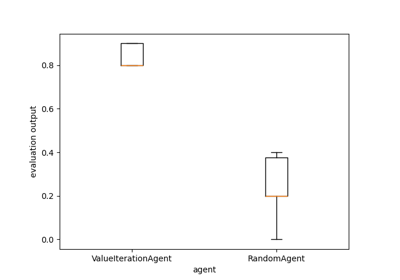

Evaluate and compare each of the agents in experiment_manager_list.

- Parameters:

- experiment_manager_list: list of ExperimentManager objects.

- n_simulations: int

Number of calls to the eval() method of each ExperimentManager instance.

- choose_random_agents: bool

If true and n_fit>1, use a random fitted agent from each ExperimentManager at each evaluation. Otherwise, each fitted agent of each ExperimentManager is evaluated n_simulations times.

- fignum: string or int

Identifier of plot figure.

- show: bool

If true, calls plt.show().

- plot: bool

If false, do not plot.

- Returns:

- dataframe with the evaluation results.

Examples

>>> from rlberry.agents.stable_baselines import StableBaselinesAgent >>> from stable_baselines3 import PPO, A2C >>> from rlberry.manager import ExperimentManager, evaluate_agents >>> from rlberry.envs import gym_make >>> import matplotlib.pyplot as plt >>> >>> if __name__=="__main__": >>> names = ["A2C", "PPO"] >>> managers = [ ExperimentManager( >>> StableBaselinesAgent, >>> (gym_make, dict(id="Acrobot-v1")), >>> fit_budget=1e5, >>> agent_name = names[i], >>> eval_kwargs=dict(eval_horizon=500), >>> init_kwargs= {"algo_cls": algo_cls, "policy": "MlpPolicy", "verbose": 0}, >>> n_fit=1, >>> seed=42, >>> ) for i, algo_cls in enumerate([A2C, PPO])] >>> for manager in managers: >>> manager.fit() >>> data = evaluate_agents(managers, n_simulations=50)